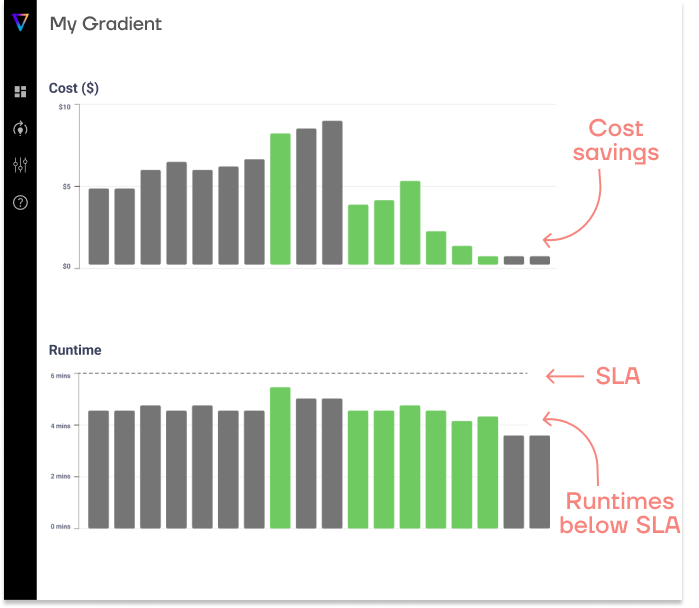

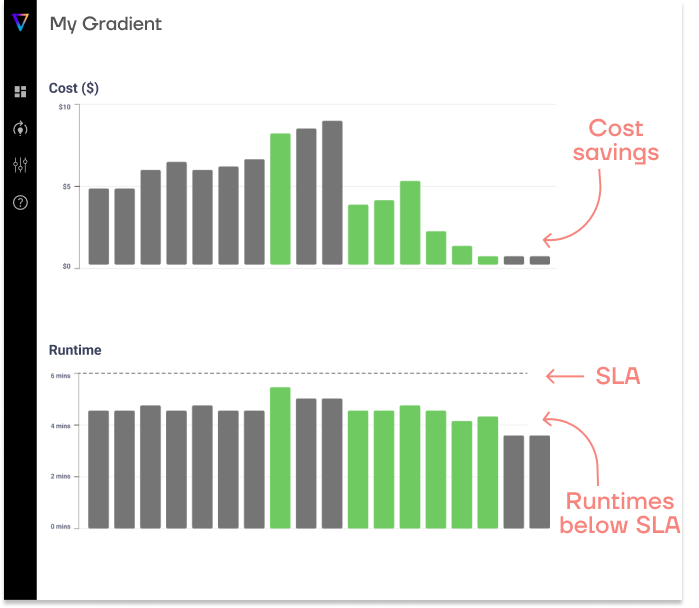

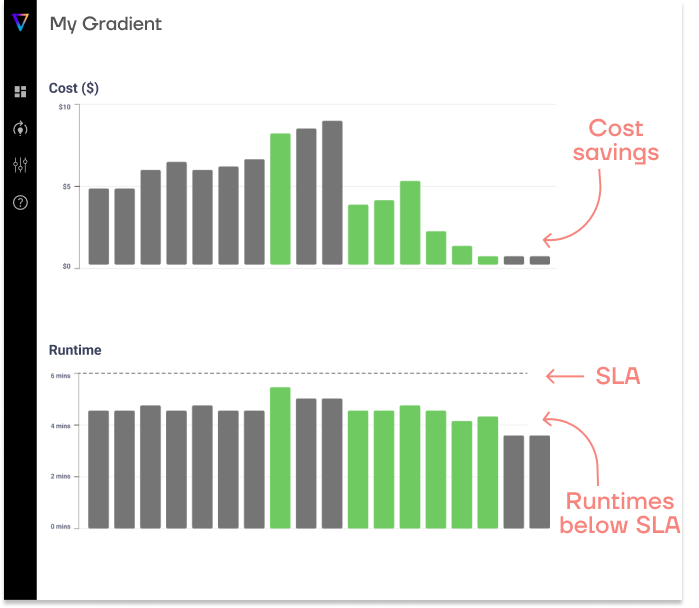

Save up to 50% on compute!

Save on compute while meeting runtime SLAs with Gradient’s AI compute optimization solution.

Since launching in 2013, Databricks has continuously evolved its product offerings from machine learning pipeline to end-to-end data warehousing and data intelligence platform.

While we at Sync are big fans of all things Databricks (particularly how to optimize cost and speed) we often get questions about understanding Databricks new offerings—particularly as product development has accelerated in the last 2 years.

To help in your understanding, we wrote this blog post to address the question, “What is Databricks Unity Catalog?” and whether users should be using it (the answer is yes). We walk through a precise technical answer, and then dive into the details of the catalog itself, how to enable it and frequently asked questions.

The Databricks Unity Catalog is a centralized data governance layer that allows for granular security control and managing data and metadata assets in a unified system within Databricks. Additionally, the unity catalog provides tools for access control, audits, logs and lineage.

Read Databricks’ overview of Unity Catalog here.

You can think of the unity catalog as an update designed to bridge gaps in the Databrick ecosystem—specifically to eliminate and improve upon third-party catalogs and governance tools. With many cloud-specific tools being used, Databricks brought in a unified solution for data discovery and governance that would seamlessly integrate with their Lakehouse architecture. Thus, while Unity Catalog was initially billed as a governance tool, in reality it streamlines processes across the board. While simplistic, it’s not wrong to say Unity Catalog simply makes everything Databricks run smoother.

Notably, the Unity Catalog is being offered by default on the Databricks Data Intelligence Platform. This is because Databricks believes the Unity Catalog is a huge benefit to their users (and we are inclined to agree!). If you have access to the Unity Catalog, we highly recommend enabling it in your workspace. As of June 2024, Databricks Unity Catalog is open-sourced, available here on GitHub.

Save on compute while meeting runtime SLAs with Gradient’s AI compute optimization solution.

The Unity Catalog benefits can be thought of in four buckets: data governance, data discovery, data lineage, and data sharing and access.

The unity catalog provides a structured way to tag, document and manage data assets and metadata. This allows for a comprehensive search interface that utilizes lineage metadata (including full lineage) history and ensures security based on user permissions.

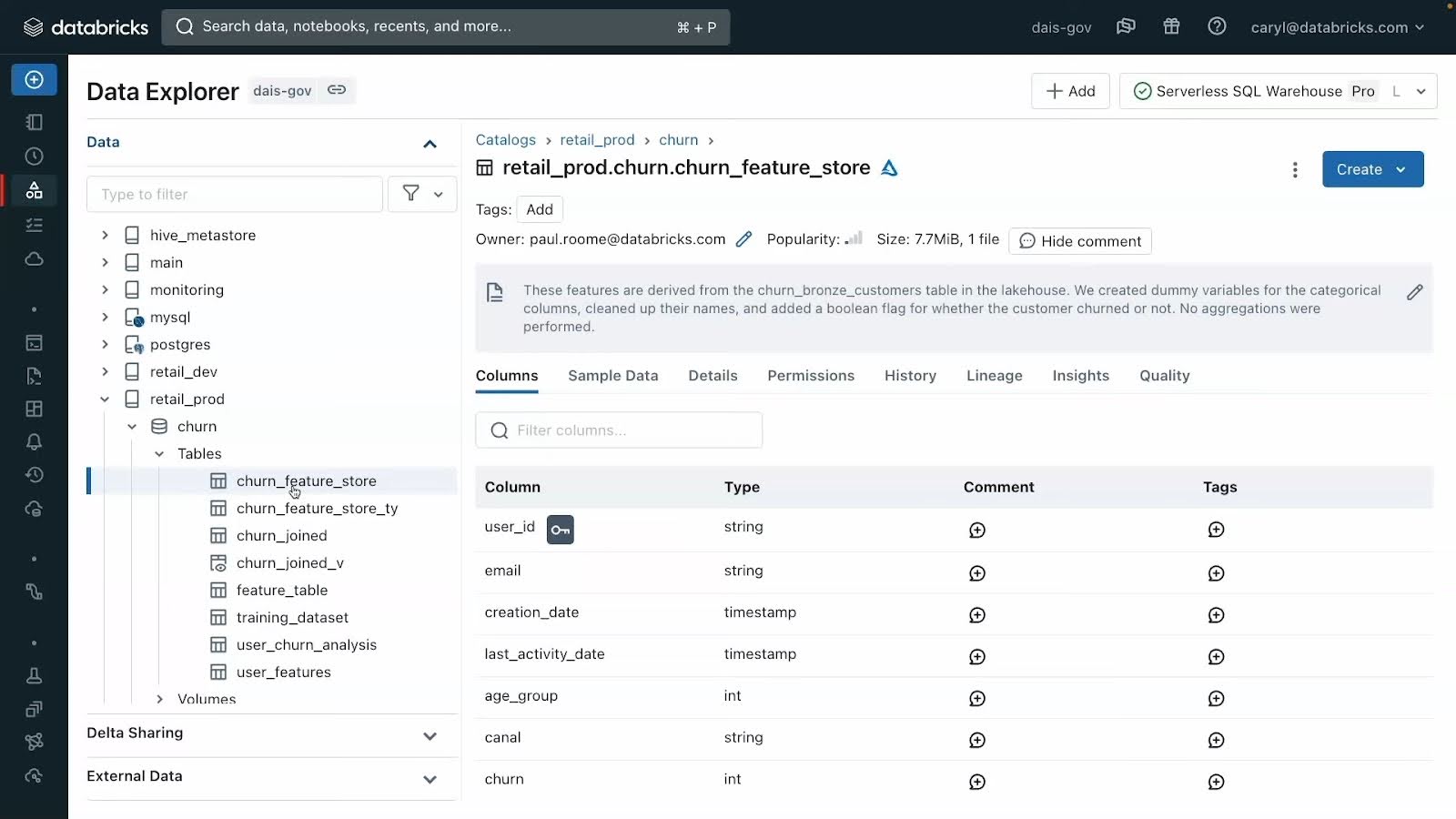

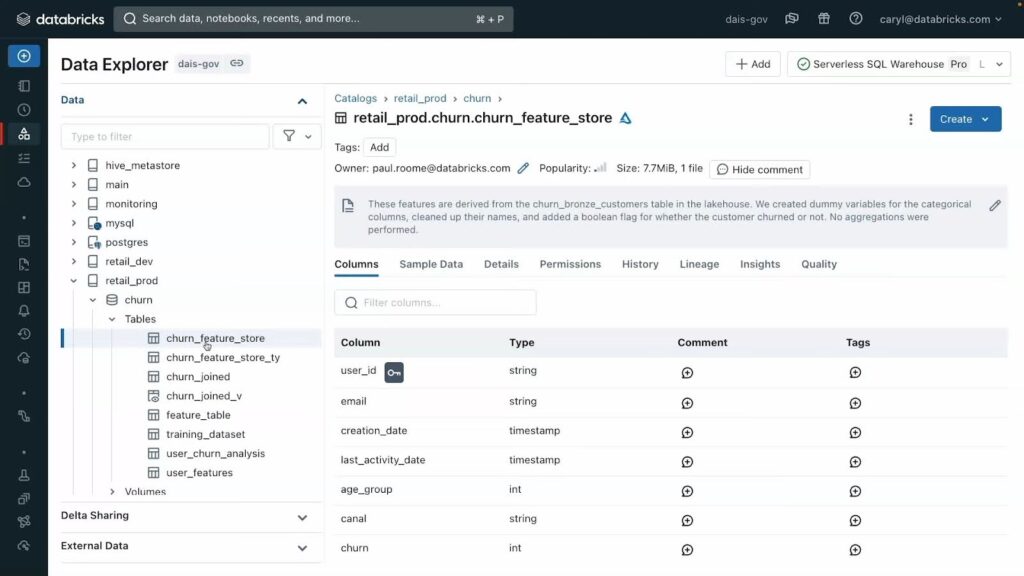

Users can either explore data objects through the Catalog Explorer, or parse through data using SQL or Python to query datasets and create dashboard from available data objects. In Catalog explorer, users can preview sample data, read comments and check field details (50 second preview from Databricks here).

A preview of the Catalog explorer for data discovery in Unity Catalog (via Databricks/Youtube)

Unity Catalog is a layer over all external compute platforms and acts as a central repository for all structured and unstructured data assets (such as files, dashboards, tables, views, volumes, etc). This unified architecture allows for a governance model that includes controls, lineage, discovery, monitoring, auditing, and sharing.

Unity Catalog thus offers a single place to administer data access policies that apply across all workspaces. This allows you to simplify access management with a unified interface to define access policies on data and AI assets and consistently apply and audit these policies on any cloud or data platform.

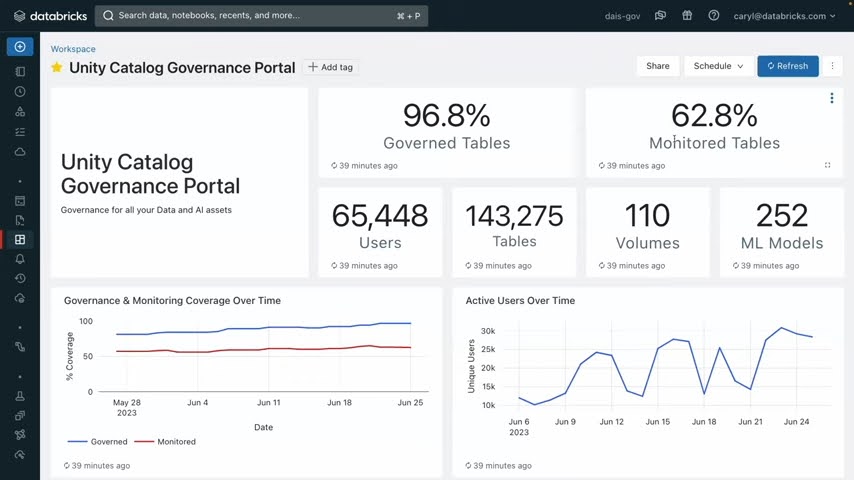

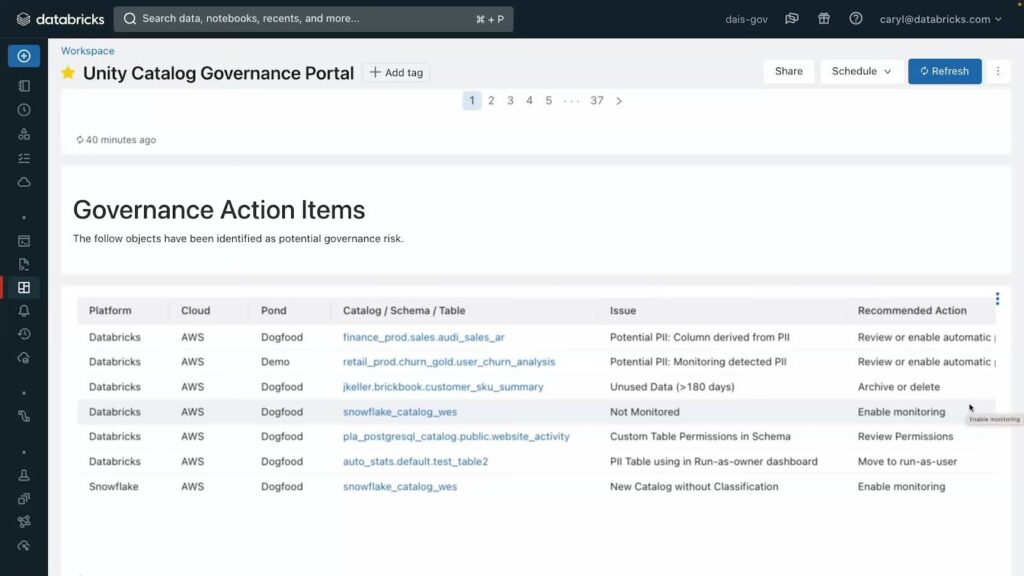

All of Databricks governance parameters can be accessed via their Unity Catalog Governance Portal. The Databricks Data Intelligence Platform leverages AI to best understand the context of tables and columns, the volume of which can be impossible for manual categorization. This also enables you to quickly assess how many of your tables are monitored via Lakehouse Monitoring — Databricks’s new “AI for Governance tool”.

A screenshot of the Unity Catalog Governance portal shows how their Lakehouse Monitoring uses AI to automatically monitor tables and alert users to uses like PII leakage or data drift (via Databricks/Youtube)

With Lakehouse monitoring you can also set up alerts that automatically detect and correct PII leakage, data quality, data drift and more. These auto alerts are contained within their own section of the Governance Portal, which shows when the issue was first detected, and where the issue first stemmed from.

A preview of the governance action items shows how issues are identified by cause and Catalog/Schema/Table. Digging further in will reveal the time and date of first incidence as well as it where it stems from.

It incorporates a data governance framework and maintains an extensive audit log of actions performed on data stored within a Databricks account.

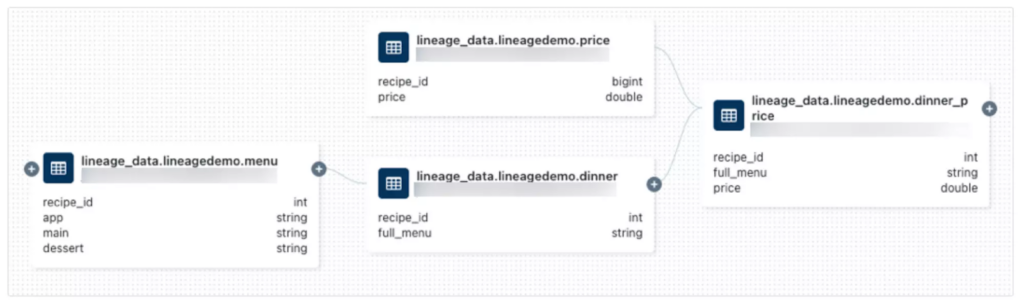

As the importance of Data Lineage has grown, Databricks has responded with end-to-end lineages for all workloads. Lineage data includes notebooks, workflows and dashboards and is captured down to the column level. Unity Catalog users can parse and extract lineage metadata from queries and external tools using SQL or any other language enabled in their workspace, such as Python. Lineage can be visualized in the Catalog Explorer in near-real-time and

Unity Catalog’s lineage feature provides a comprehensive view of both upstream and downstream dependencies, including the data type of each field. Users can easily follow the data flow through different stages, gaining insights into the relationships between field and tables.

An example of the metadata lineage within Unity Catalog

Like their governance model, Databricks restricts access to data lineage based on the logged-in users’ privileges.

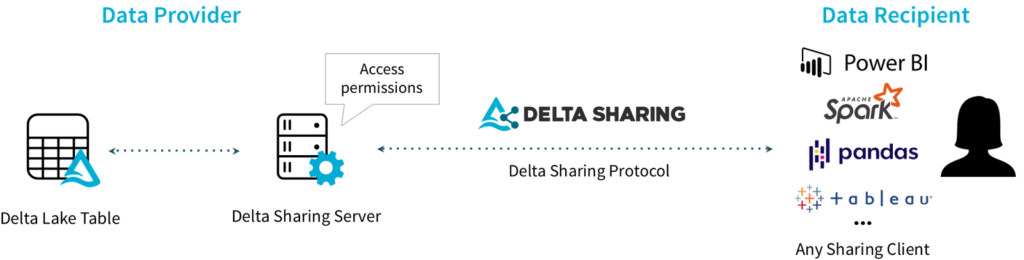

One of the most welcomed features of Databricks Unity Catalog is its built-in sharing method which is built on Delta Sharing, Databricks’ popular cloud-platform-agnostic open protocol for sharing data and managing permissions launched in 2021.

Within Unity Catalog you can access control mechanisms use identity federation, allowing Databricks users to be service principals, individual users, or groups. In addition, SQL-based syntax or the Databricks UI can be used to manage and control access, based on tables, rows, and columns, with the attribute level controls coming soon.

Databricks has a standards-complaint security model based on ANSI SQL and allows administrators to grant permissions in their existing data lake using familiar syntax, at the level of catalogs, databases (also called schemas), tables, and views.

Unity Catalog grants user-level permissions for Governance Portal, Catalog Explorer and for data lineages and sharing. Unity Catalog in effect has one model for safeguarding appropriating access across your full data estate with permissions, row level, and column level security.

It almost allows registering and governing access to external data sources, such as cloud object storage, databases, and data lakes, through external locations and Lakehouse Federation.

Yes, because Unity Catalog reduces both storage costs and fees for external licensing, it reduces cost compared to previous solutions. It also indirectly saves time by greatly reducing bottlenecks for ingesting data, reducing time spent on repetitive tasks by an average of 80% (according to Databricks). This all comes free and automatically enabled for all new users of the Databricks Data Intelligence Platform.

The following is a step-by-step guide to setting up and configuring Databricks Unity Catalog.

Unity Catalog works existing data storage systems and governance solutions such as Atlan, Fivetran, dbt or Azure data factory. It also integrates with business intelligence solutions such as Tableau, PowerBi and Qlik. This makes it simple to leverage your existing infrastructure for updated governance model, without incurring expensive migration costs (for a full list of integrations check out Databricks page here).

What if my workspace wasn’t enabled for Unity Catalog automatically?

If your workspace was not enabled for Unity Catalog automatically, an account admin or metastore admin must manually attach the workspace to a Unity Catalog metastore in the same region. If no Unity Catalog metastore exists in the region, an account admin must create one. For instructions, see Create a Unity Catalog metastore.

Save on compute while meeting runtime SLAs with Gradient’s AI compute optimization solution.

The following limitations apply for all object names in Unity Catalog:

For a full list of Unity Catalog Limitations, read the full documentation for the Unity Catalog.

While Unity Catalog offers compelling features for Databricks users, it’s important to understand how it compares to other prominent solutions in the market, particularly AWS Glue Data Catalog and Apache Atlas. Each of these platforms brings unique strengths to data governance and metadata management, though they differ significantly in their approach and implementation.

| Feature | Databricks Unity Catalog | AWS Glue | Apache Atlas |

| Core Metadata Management | Unified lakehouse metadata | AWS-focused metadata store | Multi-source metadata repository |

| Integration Capabilities | Native Databricks integration | AWS service integration | Hadoop ecosystem focus |

| Lineage Tracking | Column-level, real-time | Table-level only | Entity-level relationship tracking |

| Access Controls | Fine-grained access controls | RBAC | Basic RBAC |

| Data Quality & Profiling | AI-powered monitoring | Basic profiling | Manual profiling |

Unity Catalog revolutionizes metadata management through its unified lakehouse architecture, bridging data lakes and warehouses with a three-level namespace structure. Its architecture enables centralized governance across Databricks workspaces. AWS Glue focuses on AWS ecosystem integration, using crawler-based systems to automatically catalog data sources. Apache Atlas offers a flexible approach with custom metadata models, though this flexibility increases setup complexity.

Unity Catalog leverages Delta Sharing for secure data sharing across workspaces and organizations. AWS Glue excels within the AWS ecosystem but may need additional configuration for external tools. Apache Atlas requires custom connectors for modern cloud services but allows extensive customization.

Unity Catalog provides real-time, column-level lineage tracking, enabling immediate detection of data quality issues. AWS Glue tracks table-level dependencies, primarily focusing on ETL jobs within AWS services. Apache Atlas can capture complex relationships but needs more manual configuration and lacks automated tracking

Unity Catalog implements SQL-based permissions with fine-grained control. AWS Glue uses AWS IAM, offering powerful but complex security controls. Apache Atlas provides basic role-based access control but often requires supplementary tools.

Unity Catalog features AI-powered monitoring for automated quality management. AWS Glue offers basic profiling through DataBrew. Apache Atlas requires third-party tools for comprehensive quality management.

Organizations should consider their existing infrastructure (AWS users might prefer Glue), governance requirements (Unity Catalog offers sophisticated controls), and team expertise (Atlas requires specialized knowledge). Unity Catalog handles complex deployments effectively, while Glue works best for AWS-centric operations, and Atlas serves those needing high customization flexibility.

As Databricks grew beyond its initial purpose, Unity Catalog was created as the solution. In order to streamline the various product offerings within their ecosystem, Databricks introduced the Unity Catalog to eliminate third-party integrations, particularly in the realm of data governance. We feel this has been tremendously well executed and as Unity Catalog comes free and installed by default for all new Databricks Data Intelligence Platform users, we feel it’s highly advantageous to maximize its utility, particularly for data governance, lineage and data discovery.

Related Case Study:

Learn how an AdTech company saved 300 eng hours, met SLAs, and saved $10K with Gradient

Save on compute while meeting runtime SLAs with Gradient’s AI compute optimization solution.

From chaos to clarity: How Gradient Insights saves your budget (and sanity)

Kartik Nagappa

Kartik Nagappa

From chaos to clarity: How Gradient Insights saves your budget (and sanity)

Unleashing the power of Declarative Computing

Noa Shavit

Noa Shavit

Unleashing the power of Declarative Computing

Gradient introduces cloud and Databricks cost breakdowns

Kartik Nagappa

Kartik Nagappa

Gradient introduces cloud and Databricks cost breakdowns