A new approach to managing compute resources: Insights from Sync

Today, data engineers face considerable challenges optimizing the performance of their data pipelines while managing ever rising cloud infrastructure costs. On a recent episode of the Unapologetically technical podcast, Sync Co-founder and CEO, Jeff Chou, shed light on the innovative solutions that are reshaping how we approach these challenges.

Read on for the key insights, or watch the full interview with big data expert, Jesse Anderson, below:

Bridging the technical-business divide

One of the most significant challenges in the data world is the gap between technical execution and business objectives. Data engineers often find themselves caught between the technical intricacies of managing data pipelines and the business objectives set by leadership. This gap can lead to misaligned priorities and over-provisioning of resources that could cost the company dearly.

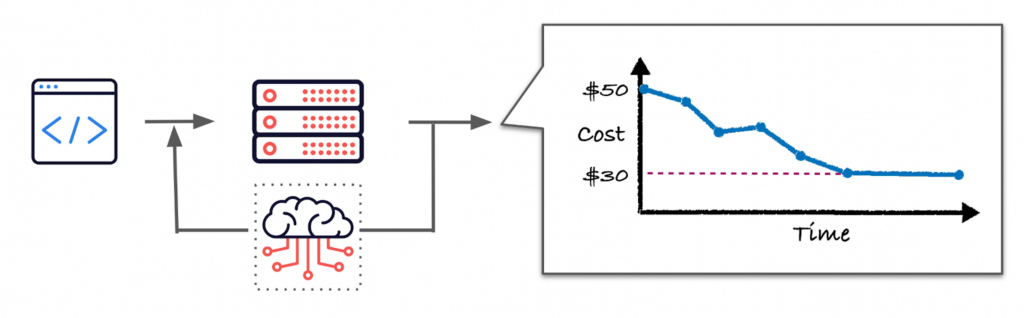

At Sync, we believe in addressing challenges head-on, which is why we built Gradient to help organizations work toward both technical and business goals. Gradient’s ML-powered compute optimization engine helps organizations reduce compute costs and/or improve workload performance.

Our approach to compute optimization is 100% goal-oriented. Rather than having data engineers go through the trials-and-errors of cluster tuning to meet requirements, Gradient uses those requirements to automatically recommend the optimal configuration for your jobs. We call this approach “Declarative Computing,” a play on declarative programming.

Declarative Computing: A paradigm shift in compute management

Declarative computing is a way to provision computing infrastructure based on desired outcomes, such as cost, runtime, latency, etc. Typically, resources are provisioned and workload performance is a result of the available compute resources. With declarative computing, cost/performance is the input that determines which resources are provisioned, so this approach represents a significant shift in cloud resource management.

Gradient uses this approach to help organizations meet their goals. By default Gradient optimizes for cost savings. However, you can input your desired runtime SLA for each workload, and Gradient will set the optimal configuration to meet that SLA at the lowest price possible.

The results are:

- Reduced costs: An end to overpaying for over-provisioned clusters. Gradient will find the optimal configuration for your requirements. Customers typically see an up to 50% reduction in their Jobs compute costs.

- Improved performance: Control job runtimes and consistently meet your SLAs. Gradient fine tunes clusters to meet your desired runtime SLAs at the most efficient manner. It also shows you the tradeoffs between cost and performance, if and when they apply.

- Time savings: Tuning clusters is time consuming, undifferentiated work. With Gradient, you can unburden your data engineers from this task, so they can contribute to your core offering rather than manage resource allocation details.

- Adaptability: Gradient continuously monitors and optimizes your jobs so that it can automatically adapt to changes in your data pipelines, including variable-sized data. This ensures your data infrastructure is always optimized to your goals.

Why you should care

Gradient offers more than just cost savings. It’s your single pane of glass for your data processing infrastructure. It ensures you consistently meet your critical runtime SLA, and improves productivity by automating cluster management. But most importantly, it ensures that technical execution aligns with business objectives, such as cost reductions or runtime SLAs. The result is a more efficient workflow that directly contributes to the organization’s bottom line.

In addition, by leveraging advanced models that are automatically fine tuned to each of your jobs, Gradient unlocks the ability to optimize your data infrastructure at scale. With Gradient, a single engineer can optimize thousands of jobs, with visibility and control over each job’s costs and performance metrics.

Deep dive: Compute cluster cost optimization

Spark cluster cost optimization is key to Gradient. Based on years of research, dozens of design partners, and many many petabytes of data Gradient offers opinionated monitoring of Spark metrics, focusing on the critical factors that impact performance and costs.

Here are a couple of those key factors:

Data Skew

Data skew is a common challenge, often leading to uneven workload distribution. Gradient automatically changes cluster sizes for optimal performance despite data skew. This can lead to significant savings, especially if your data is highly skewed (Gradient will automatically reduce the cluster size to improve efficiency).

Effective use of spot instances

Spot instances can significantly reduce cloud computing costs, as they can be up to 90% cheaper than on-demand clusters. However, spot instances come with the risk of job failure, as nodes can be evicted at any time without notice.

By leveraging spot instances effectively, data teams can achieve substantial cost savings without compromising on job reliability or performance. We’re working on ways that Gradient can help organizations maximize the benefits of spot instances, while mitigating their drawbacks, including:

- Optimizing clusters based on the statistical runtime variations caused by Spot instances

- Displaying the Spot vs. On-Demand breakdown when users use the “Spot with Fallback” option in Databricks

Adapting to varying data sizes

Data size fluctuations can lead to over- or under-provisioning of resources if not handled properly. This could result in compute cost increases, unmet runtime SLAs, and even failed job runs.

Gradient automatically adapts to varying data sizes by:

- Changing cluster sizes based on input data size and job complexity

- Using historical data to predict resource requirements for different data sizes

- Utilizing statistical historical information to provide optimal clusters even with “noisy” data sizes

This adaptive approach ensures optimal resource utilization regardless of data size variations, leading to consistent performance and cost-efficiency.

Conclusion

By bridging the gap between technical execution and business objectives, leveraging the power of machine learning / AI , and providing sophisticated tools for cost and performance optimization, Gradient by Sync enables data teams to achieve more with their resources.

As data volumes continue to grow exponentially, Gradient helps organizations manage their data infrastructure efficiently, leveraging the power of machine-learning technology. It is an intelligent, adaptive system that can automatically manage and optimize compute resources to meet business objectives, such as desired runtimes or costs.

If you’re interested in learning more, schedule a personalized demo here.

More from Sync:

From chaos to clarity: How Gradient Insights saves your budget (and sanity)

Kartik Nagappa

Kartik Nagappa

From chaos to clarity: How Gradient Insights saves your budget (and sanity)

Unleashing the power of Declarative Computing

Noa Shavit

Noa Shavit

Unleashing the power of Declarative Computing

Gradient introduces cloud and Databricks cost breakdowns

Kartik Nagappa

Kartik Nagappa

Gradient introduces cloud and Databricks cost breakdowns