Meet Slingshot

Maximize your cloud data platform efficiency

With transparency into your financial and compute spend, you can track and forecast usage more accurately and maximize your investment, including your AI programs.

Key benefits

Optimize & do more

Cost efficiencies let you invest in new business uses.

Gain visibility

Transparency helps you intelligently manage resources.

Manage your way

Federated management lets you tailor controls to your own policies.

Supported platforms

Optimization hub

Databricks & Snowflake automated optimization

Leverage tried-and-true optimizations for compute, queries, and data storage. Click to apply, or select workloads to fully automate.

Advanced ML models

ML-powered optimization for Databricks Jobs

The ease of serverless without the limitations. Slingshot's advanced ML models provide custom-tailored optimization for Databricks Jobs.

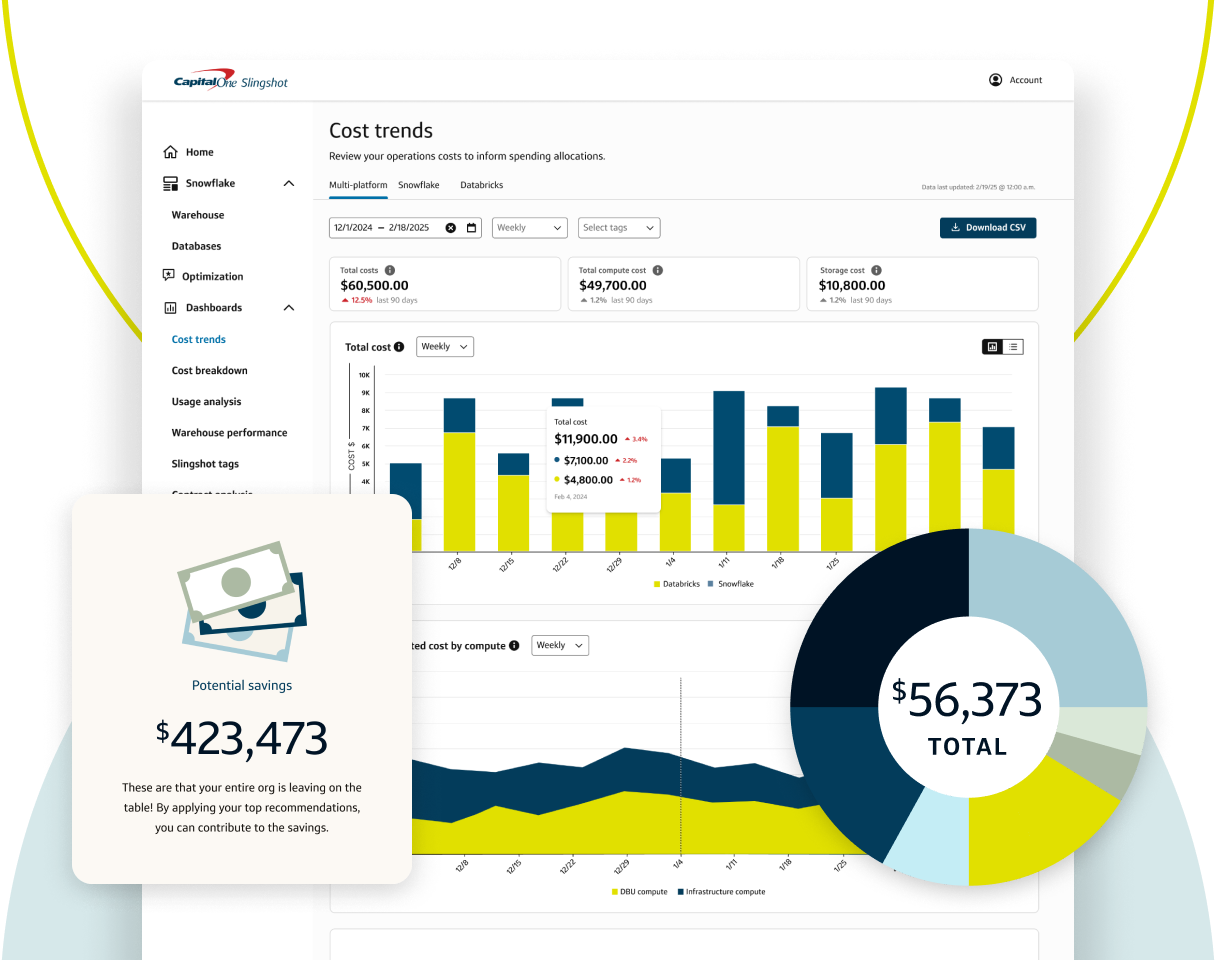

Data visualization

Visualizations and insights for data clouds

Allocate costs to lines of business (LOBs), assess performance, and uncover inefficiencies and opportunities to save up to 40% with Slingshot optimization.

Governance & IAM

Governance and federation for Databricks & Snowflake

Built internally out of need, Slingshot was created to enable the modern enterprise to manage massive amounts of data.

Related content

Health check

Free dashboard to guide your Databricks optimization efforts

Assess the health of your Databricks environment across Serverless, Jobs, DBSQL, APC and DLT with our powerful, free dashboard.

Slingshot next steps

Start your free trial

Visit the Snowflake Marketplace today to see how Slingshot can help you maximize your Snowflake investment.

Snowflake optimization guide

Learn Snowflake optimization techniques for balancing cost and performance at scale.

Slingshot Frequently Asked Questions

How do I know that the Snowflake recommendations applied are actually saving money?

The value report contains a detailed history of cost savings generated by Slingshot recommendations. You can view savings in aggregate across all recommendations or individually for each recommendation applied.

Additionally, the warehouse details page visualizes cost over time and includes a change history so that you can see why costs changed.

What types of Snowflake cost optimizations does Slingshot cover?

Slingshot optimizes the following objects in Snowflake:

- Schedules for warehouse size, cluster size and auto-suspend

- Warehouse statement timeout

- Just-right time travel provisioning

- Ideal COPY file sizes

- Removal of unused tables
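For readers who want to see what these settings look like at the Snowflake level, here is a minimal sketch in Python that renders the corresponding SQL. The `WarehouseSettings` class and both helper functions are hypothetical names chosen for illustration and are not part of Slingshot. The SQL parameters themselves (`WAREHOUSE_SIZE`, `MAX_CLUSTER_COUNT`, `AUTO_SUSPEND`, `STATEMENT_TIMEOUT_IN_SECONDS`, `DATA_RETENTION_TIME_IN_DAYS`) are standard Snowflake options. The sketch assumes that "cluster size" corresponds to the multi-cluster maximum, which is an interpretation rather than something stated above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WarehouseSettings:
    """Hypothetical container for one schedule slot (not a Slingshot type)."""
    size: str                       # e.g. "SMALL", "MEDIUM"
    max_clusters: int               # assumed mapping for "cluster size"
    auto_suspend_seconds: int       # idle time before the warehouse suspends
    statement_timeout_seconds: int  # cap on a single statement's run time


def alter_warehouse_sql(warehouse: str, s: WarehouseSettings) -> str:
    """Render a Snowflake ALTER WAREHOUSE statement for the given settings."""
    return (
        f"ALTER WAREHOUSE {warehouse} SET "
        f"WAREHOUSE_SIZE = '{s.size}' "
        f"MAX_CLUSTER_COUNT = {s.max_clusters} "
        f"AUTO_SUSPEND = {s.auto_suspend_seconds} "
        f"STATEMENT_TIMEOUT_IN_SECONDS = {s.statement_timeout_seconds};"
    )


def time_travel_sql(table: str, retention_days: int) -> str:
    """Render the statement that sets a table's Time Travel retention."""
    return (
        f"ALTER TABLE {table} SET "
        f"DATA_RETENTION_TIME_IN_DAYS = {retention_days};"
    )


# Example: a quieter overnight slot for a hypothetical warehouse.
overnight = WarehouseSettings("SMALL", 1, 60, 1800)
print(alter_warehouse_sql("ANALYTICS_WH", overnight))
print(time_travel_sql("SALES.PUBLIC.ORDERS", 1))
```

Rendering statements as strings keeps the sketch free of any database connection. In practice, a scheduler would run statements like these at the start of each time slot.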

How are warehouse schedule recommendations formulated?

Slingshot analyzes the workload and query attributes of a warehouse. It tracks factors including query size, query load, queued queries, and data spillage. Based on these usage patterns, Slingshot generates recommendations to optimize the warehouse's Snowflake resources.
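To make the idea concrete, the following is a deliberately simplified Python sketch of a rule that turns usage signals into a size suggestion. It is not Slingshot's algorithm. The `HourlyStats` fields, the `recommend_size` function, and the thresholds are all assumptions chosen for illustration.

```python
from dataclasses import dataclass

# Subset of Snowflake warehouse sizes, smallest to largest.
SIZES = ["XSMALL", "SMALL", "MEDIUM", "LARGE", "XLARGE"]


@dataclass(frozen=True)
class HourlyStats:
    """Hypothetical usage summary for one warehouse over one hour."""
    queued_queries: int         # queries that waited for capacity
    spilled_bytes: int          # bytes spilled from memory to storage
    avg_running_queries: float  # average number of concurrent queries


def recommend_size(current: str, stats: HourlyStats) -> str:
    """Suggest a warehouse size from simple, illustrative rules."""
    i = SIZES.index(current)
    # Spilling suggests memory pressure, so step up one size if possible.
    if stats.spilled_bytes > 0 and i < len(SIZES) - 1:
        return SIZES[i + 1]
    # No spilling, no queuing, and light load: step down one size.
    if stats.queued_queries == 0 and stats.avg_running_queries < 1.0 and i > 0:
        return SIZES[i - 1]
    # Otherwise keep the current size. Queuing alone is usually a
    # concurrency issue, addressed with cluster count rather than size.
    return current


print(recommend_size("SMALL", HourlyStats(0, 10_000_000, 2.0)))
print(recommend_size("SMALL", HourlyStats(0, 0, 0.2)))
```

A real recommendation engine would weigh many more signals over longer time windows. The sketch only shows how spillage and queuing can point toward different adjustments.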

Do recommendations support warehouses with QAS?

Yes, we analyze warehouses that have Query Acceleration Service (QAS) enabled and provide recommendations.

Additionally, in some cases, we can enhance QAS cost-efficiency by adjusting the time slots in the scheduling recommendations to account for delayed queries or queries that don't meet the eligibility criteria.